Conversational Agents to Support Collaborative Learning

Project Overview

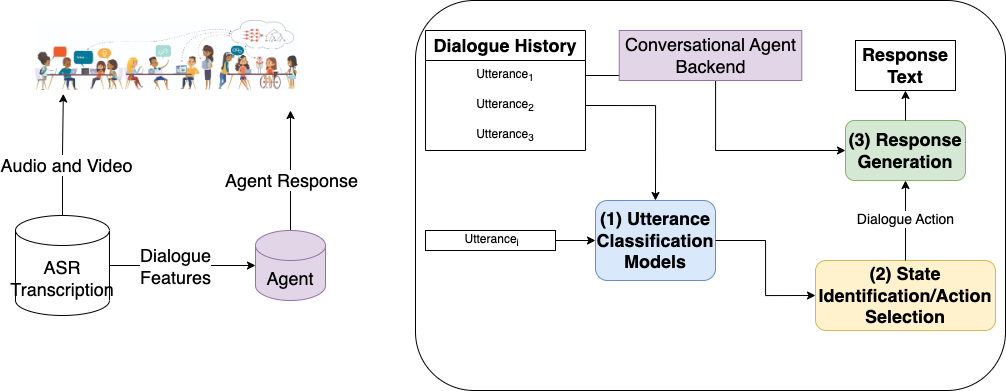

With a system for transcribing student utterances in place, the next question the NSF iSAT group has sought to address is: when should we intervene during student interactions, and when we do, how? Specifically, we want to understand how conversational agents can facilitate collaboration among students working in small groups. To that end, I have collaborated with educators and human-teaming experts to design a dialog policy-driven conversational agent that behaves the way a teacher would in different scenarios. Students work in groups of three to complete a Jigsaw worksheet: each student is the expert on one type of sensor (moisture, sound, or environment) and must share that knowledge with the rest of the group. Our Jigsaw Interactive Agent (JIA) is intended to facilitate conversations and keep the collaboration going when the teacher cannot attend to all groups at once. I reviewed classroom recordings with these experts to identify (1) when they would intervene in the classroom, (2) how they would describe that classroom scenario, and (3) how they would intervene to best support students. Together, we defined an initial set of “dialog states” that describe the current status of the conversation and corresponding “dialog actions” that describe how the teacher would respond. I am using the dialog actions to fine-tune large language models (LLMs) so that their responses align more closely with the behavior of a teacher than those of an out-of-the-box LLM. The entire system (the state-to-action mapping and the LLMs) is being evaluated through a lab study conducted in three phases: Wizard-of-Oz trials in which a teacher responds to the students, trials in which the agent follows our dialog policy but uses pre-programmed responses, and, later this spring, trials in which the LLMs respond to students.

Authorship

My focus in this project has been to develop the dialog policy and to experiment with different LLMs that could respond to students during collaboration tasks. I wrote the initial set of mappings from dialog states to dialog actions based on sessions with the education experts, and we are iteratively evaluating how they perform in our lab environment. I also implemented the initial mappings in our JIA application so that it can be used in the lab through a web-based chat interface. Presently, I am fine-tuning several LLMs to see which one performs best at supporting collaborative learning.

Methodology

The initial mapping of dialog states to actions is a straightforward set of if/else statements that check whether the conversation is in a particular state, based on thresholds over speaker-specific utterances and Collaborative Problem Solving codes. The system takes transcribed audio as input through Amazon EventBridge and then pushes the resulting response from the JIA agent to a DynamoDB table. I am fine-tuning three LLMs (Falcon^1, INCITE^2, and Flan-T5^3) on the NCTE^4 corpus of math classroom transcripts. For each model, I train on the student-teacher utterance pairs in the corpus, treating the teacher response as the ‘gold standard’ for the model to learn. In addition to this plain fine-tuning, I am training a conditioned variation in which the model is given a desired output dialog act, or speaker intention, along with the student utterance, to constrain the response to be closer to how a teacher would respond in a given scenario. To evaluate the models before they go into the JIA interface in our lab setting, I am collecting annotations of the responses generated on our test set for Fluency, Relevance, and Helpfulness. Additionally, when we provide a desired output dialog act, we ask annotators to rate how well the response adheres to that dialog act, to make sure we are constraining the output appropriately.
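As a rough illustration of the two mechanisms described above, the following Python sketch shows (a) an if/else policy that maps a window of utterances to a dialog state and then to a dialog action, and (b) how a desired dialog act might be prepended to a student utterance for the conditioned variation. Every state name, action name, Collaborative Problem Solving code label, threshold, and prompt format here is a hypothetical placeholder chosen for the example; none of them is taken from the actual JIA policy or training setup.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Utterance:
    speaker: str
    text: str
    cps_codes: List[str]  # Collaborative Problem Solving codes on this utterance


# Hypothetical thresholds; the real policy's values are not given in the text.
MIN_UTTERANCES_PER_SPEAKER = 2
MIN_SHARING_CODES = 1

# Hypothetical dialog state -> dialog action mapping.
POLICY: Dict[str, str] = {
    "unequal_participation": "invite_quiet_student",
    "no_knowledge_sharing": "prompt_expert_to_share",
    "on_track": "no_intervention",
}


def detect_state(window: List[Utterance], group: List[str]) -> str:
    """Classify a recent window of utterances with simple threshold checks.

    `group` lists the (non-empty) set of student identifiers in the group.
    """
    counts = {speaker: 0 for speaker in group}
    sharing = 0
    for utt in window:
        if utt.speaker in counts:
            counts[utt.speaker] += 1
        # "sharing_information" is an assumed code label for this sketch.
        sharing += utt.cps_codes.count("sharing_information")

    if min(counts.values()) < MIN_UTTERANCES_PER_SPEAKER:
        return "unequal_participation"
    elif sharing < MIN_SHARING_CODES:
        return "no_knowledge_sharing"
    else:
        return "on_track"


def choose_action(window: List[Utterance], group: List[str]) -> str:
    """Look up the dialog action for the detected dialog state."""
    return POLICY[detect_state(window, group)]


def build_model_input(student_utterance: str,
                      dialog_act: Optional[str] = None) -> str:
    """Format a model input, optionally conditioned on a desired dialog act.

    The prompt layout is an assumption; the target during fine-tuning would
    be the teacher response paired with this student utterance.
    """
    if dialog_act is None:
        return f"Student: {student_utterance}\nTeacher:"
    return f"Dialog act: {dialog_act}\nStudent: {student_utterance}\nTeacher:"
```

In this sketch, calling `choose_action` on a window in which one of the three students has not spoken returns the hypothetical `invite_quiet_student` action, and `build_model_input` with a dialog act produces the same input as the unconditioned version with one extra leading line.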

Outcomes and Impact

The design methodology for this project was accepted as a poster presentation at the 2024 AI4Ed Workshop, co-located with AAAI. We have just finished the Wizard-of-Oz phase of the lab study and will start the programmed-response phase in early March 2024, followed by the LLM-based response phase in May 2024. The fine-tuned LLMs currently receive low scores from our human annotators, so I will continue to experiment with different model variations until we are confident that the agent would be helpful and safe to use with students in our lab and, ultimately, in classroom settings.

Acknowledgements

This research was supported by the NSF National AI Institute for Student-AI Teaming (iSAT) under grant DRL 2019805. The opinions expressed are those of the authors and do not represent views of the NSF.

References

1. The Falcon has landed in the Hugging Face ecosystem.

2. Together. 2023. RedPajama-INCITE-3B, an LLM for everyone.

3. Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Yunxuan Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, Albert Webson, Shixiang Shane Gu, Zhuyun Dai, Mirac Suzgun, Xinyun Chen, Aakanksha Chowdhery, Alex Castro-Ros, Marie Pellat, Kevin Robinson, et al. 2022. Scaling instruction-finetuned language models.

4. Dorottya Demszky and Heather Hill. 2023. The NCTE Transcripts: A Dataset of Elementary Math Classroom Transcripts. In Ekaterina Kochmar, Jill Burstein, Andrea Horbach, Ronja Laarmann-Quante, Nitin Madnani, Anaïs Tack, Victoria Yaneva, Zheng Yuan, and Torsten Zesch, editors, Proceedings of the 18th Workshop on Innovative Use of NLP for Building Educational Applications (BEA 2023), pages 528–538, Toronto, Canada. Association for Computational Linguistics.